You may not believe me when I tell you this, but this isn’t just a random list of easily debunkable myths.

These are actual things that real people have said to me in conversation…

From understandable mistakes to Tinfoil Hat SEO, these myths need to get annihilated…

Google’s Ranking Factors

This one is the absolute mother lode.

To the person responsible for this, I hope you’re happy with yourself. About a million people are running around out there who think they need to be focused on optimizing their website for these “magic” ranking factors.

Many of these people are business owners, and they think that these factors are a checklist of things that must be done.

It’s like an internet meme now…

Every time somebody starts talking about Google’s ranking factors, I already know that person can’t rank jack squat.

If I see somebody suggesting that “Well There’s Over 200 Ranking Factors,” my eyes roll back into my head so hard that I’m legitimately concerned about tearing nerves in my brain.

The sad part is, there’s some truth to it, so it can’t just be completely debunked.

Yeah, some factors are a pretty big deal.

But that’s the thing: the vast majority of people need to be focused on the most important factors, not the fact that there might be lots of them.

Optimizing for many of the supposed factors (many of which can’t even be proven) wouldn’t do anything at all for most people.

Now, it’s true that way back in the day you could optimize your content based upon a bunch of factors like keyword density. I remember doing it, and I had a tool that would tell me that I needed to shove my keywords into my content so that I could rank on Excite and HotBot back in 1998…

It worked like gangbusters 20 years ago…
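For anyone who never used one of those tools, keyword density was just simple arithmetic: the share of the words on the page taken up by your keyword. Here is a minimal sketch in Python. The function name and the naive tokenizing are my own assumptions for illustration, not how any particular tool worked.

```python
import re

def keyword_density(text: str, keyword: str) -> float:
    """Share of the words in `text` taken up by `keyword` (hypothetical helper)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    key = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not key:
        return 0.0
    n = len(key)
    # Count every position where the keyword phrase appears word for word.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == key)
    return hits * n / len(words)

# Made-up sample copy in the keyword-stuffed style of the era.
sample = "Cheap widgets for sale. Buy cheap widgets today, because cheap widgets are great."
density = keyword_density(sample, "cheap widgets")
print(f"{density:.0%}")
```

Notice that nothing in that calculation knows anything about links, which is the whole point of the next part.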

But in the 21st century, everybody uses this thing called Google (maybe you’ve heard of it), and the big thing that was different about it (from the very start in 1998) was that it incorporates link data into the algorithm.

Today, most experienced SEOs would say that the link data (there are a bunch of link factors, it’s not just quantity of them) is the primary ranking factor.

Now, I’m not suggesting that you don’t need good content, but the vast majority of people need to focus on earning quality links from authoritative sites.

People seem to think that it’s 80% on-page and 20% off-page.

No, on competitive keywords it’s the opposite: more like 20% on-page to 80% off-page.

It seems that if you have content that people want to link to, and they’re actually linking to it, then Google likes it as long as you can avoid some big problems.

Those include:

Having your website mauled by the animal algorithms (Google likes to name its massively destructive algorithms after cute animals, like Panda and Penguin).

Having your site locked up by the internet police and thrown in Google jail (their manual reviewers pass out “pure spam” penalties all day long, and those reviewers truly hate spam).

Since these things all have to do with spam, just don’t spam, and your site should be fine.

So if John Mueller called me up on the phone and told me that “Hey Kevin, there are actually 9,188 ranking factors” I would say “Thanks dude, great job! I honestly couldn’t care less. You should go tweet about that now or something.”

The biggest thing is to separate out the factors that the algorithm cares about and the factors that your audience cares about.

At the end of the day, the sites that are going to earn the most quality links are going to be sites that people like the most.

There are endless human factors that I could talk about but you need to implement the things that matter to your site’s audience.

Understand though, Google probably cares much less about those factors than your audience does.

You don’t need Links to Rank on Competitive Keywords

The source of this myth is generally a bunch of experiments, where people end up writing tons and tons of content, where some of it ranks in Google for noncompetitive keywords (usually poorly), and then they say “hey look I ranked without links! You don’t need links to rank guys!”

Okay, that’s great that people can do that but that’s not really what professional SEOs are concerned with.

Google analyzes links, it’s part of the core algorithm. To pretend that you don’t need any links is just silly.

Links are a major component of the algorithm and have been for a very long time. Here’s the original paper from Stanford. It was published January 29, 1998.

If you don’t believe Stanford University, then you could go read Google’s About Page, which says:

“Working from their dorm rooms, they built a search engine that used links to determine the importance of individual pages on the World Wide Web.”

Do you think that Google is lying on its own About page?

Now if you want to be “purist” and do all of your content marketing through social media and paid advertising, you certainly can, but if you’re not marketing your content, you need to start doing it.

Quality links do not magically appear from nowhere, you must market your site to people who have the ability to link to it.

You Need to Build a PBN to Rank

This advice is horrible and comes from frustrated SEOs that can’t seem to be able to create content or sites that earn links.

That or for whatever reason, they can’t or are not willing to do outreach marketing to get the site going.

Now, I admit that it’s sometimes challenging to earn links in certain niches. Regarding that, I’m going to have to give advice that you may not want to hear: avoid those niches. There’s a reason that people won’t link to them.

If you think you need a PBN here’s something you should know: if Google figures out what you are doing, they are likely to penalize the entire network and your main site. If that happens, good luck recovering from that penalty. I’m pretty sure you only get one strike on a penalty like that, so if you decide to repeat this after the manual action is removed and get caught again, that domain is dead now.

I’m not suggesting that these networks don’t work to help rank sites, I’m saying that the risk of this behavior is highly understated.

It’s known among some people that the fastest and easiest way to “improve your rankings” is to play “internet police officer” and discover these sites and report them to the webspam team.

Simply put, if your competitor is penalized, well they’re no longer a competitor.

Me personally, I don’t do this since I don’t think I should be responsible for “cleaning up the internet,” but I know some people feel very differently about that.

Some of these PBN owners think “well whatever, as long as I hide my network from the SEO tools, I probably won’t get caught.”

Well, since some of the SEO tools are starting to “fill in the data gaps,” it’s going to be a pretty easy task for the people reporting sites.

If you’re reading this and have a PBN: Hey! Don’t blame me, I’m not the one filling in the “data gaps,” and I would highly encourage you to think about your exit plan, which would likely involve “decommissioning your network.”

I could have posted on how to reveal hidden networks using ScrapeBox and WGet years ago, and I chose not to, as again, it’s not my responsibility to clean up the internet.

Some people consider PBNs to be “Grey Hat SEO.”

I hate to break it to people that think that but there’s really only one way where it would be truly “grey area.”

There are people who have a private network that is at a quality level where it would be virtually impossible to figure out that it’s a private network because those sites look real, are well maintained, and they serve a purpose.

The reality of a network like that is that it takes so much time to build that it’s probably a better use of your time to do white hat SEO.

If you’ve been building websites for years and have a lot of them, can Google really penalize you for linking one site you own to another?

I honestly have no idea, it’s going to be a case by case thing, which is why it’s called “Grey Hat SEO.”

Most of the time though, what PBN builders are actually doing is definitely blackhat SEO and it will get penalized if Google finds it.

Instant Rankings

If you see anybody talking about instant rankings, run immediately.

It just doesn’t work like that on competitive keywords, with very few exceptions.

Sometimes a site with absolutely massive authority (link juice/PageRank whatever you want to call it, I prefer the term ‘authority’ as it sounds professional), posts something, and it ranks quickly. I’ve seen it happen.

Or somebody targets an extremely non-competitive keyword and Google ranks it on page one because there’s nothing quality for it to rank.

I’ve seen this done repeatedly with YouTube videos since Google does seem to prefer to rank them over regular content. Believe it or not, you can actually get them indexed and ranked by pointing a nofollow link at the video.

Anytime somebody does this; I think: “Oh boy, somebody ranked a video on a keyword that gets three searches a month, great job…” /eyeroll

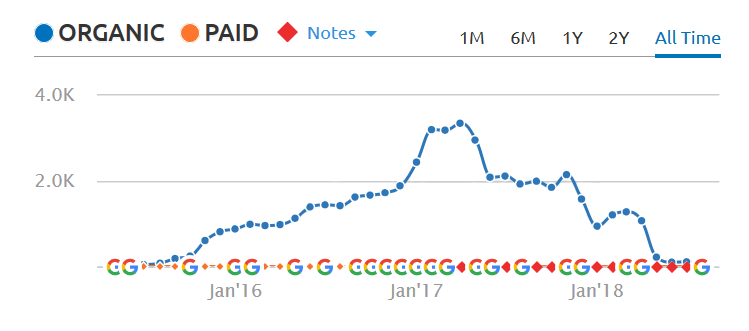

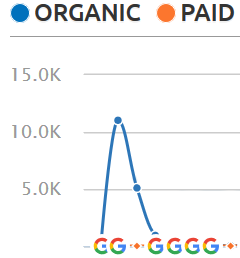

I’ve seen some cases where somebody will blast a page with a ton of spam links, (usually way beyond where the Penguin algorithm is going to eat it), and it will rank temporarily on keywords with some competition. This is pretty comical when looking at the organic traffic pattern in an SEO tool. The ranking has that “distinct traffic spike” and then flatlined rankings…

/sad trumpet 🎺 Great job, time for a new domain since disavowing all of that is probably a big waste of time. Note: I haven’t seen anybody do this since Penguin 4.0, but that doesn’t mean that somebody can’t figure it out.

Tip: You can rank quickly on a keyword if people are using a custom date modifier in the Google tools section, which not many people even know about.

The Google Sandbox

This is another fun one that mostly exists because people just don’t completely understand what’s going on.

This is pretty similar to the “instant rankings” myth. Basically, if Google doesn’t have a whole lot of information about your site and the links pointed to it, it’s not going to trust the small amount of data that it has.

So until those links have aged enough for Google to trust them, it’s pretty difficult to rank on competitive keywords.

Learning this fact discourages many people, but Google always does that. It doesn’t matter if it’s a new domain, it never really trusts the link data until that link has been there for a while. From experience, it’s about four-ish months but takes about a full year for the links to get factored entirely in.

So it’s not that there’s a “new site penalty,” it’s that none of the data that Google has on that site is trustworthy, so it doesn’t rank very well. Then once the site has links that Google does trust, this effect isn’t as noticeable, but it’s still there.

How severely this will affect your site largely depends on the competition level of the keywords, but the factor is called “Link Age,” and it is a real factor that you should be concerned about.

SEO Data Tools Are Useless Because They Are Not 100% Accurate

I’ve probably heard this in a conversation a dozen times.

There’s a lot of information that these tools do not have access to or are not able to collect. They also don’t get it right every single time.

You need to be able to interpret the data that the tools provide.

As an example, keyword difficulty scores are based upon averages, so if the page that is ranking number one has a ton of links, that is going to skew the average. In reality, it could be very easy to get content to page one for that keyword, even though the keyword difficulty score is high.
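To see how much one monster page can skew an average, here is a quick sketch in Python. The referring domain counts are made up for illustration, and I’m assuming a score built on a plain average of link counts, which is a simplification of whatever a given tool really does.

```python
from statistics import mean, median

# Hypothetical referring-domain counts for the ten pages on page one.
# The first result is the outlier with a ton of links.
referring_domains = [4200, 35, 28, 22, 19, 15, 12, 9, 7, 3]

avg = mean(referring_domains)    # dragged way up by the outlier
mid = median(referring_domains)  # closer to what a typical result looks like
print(f"mean: {avg:.0f}, median: {mid:.0f}")
```

The average says you need hundreds of referring domains. The median says most of page one is getting by with a couple dozen or fewer, which is the kind of thing you only catch by looking at the individual pages.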

There’s also the fact that none of these tools have access to disavow files and have no way to identify what sites are penalized. This might not seem like a big deal but remember that since the internet is interconnected by links, the aggregate effect is substantial.

Also, I promise you that after an update, the Penguin algorithm is probably destroying one million+ rankings an hour. The outbound links from those sites are likely devalued, and the tools obviously can’t figure that out.

So because things like that occur, instead of understanding those things and trying to account for them, some people assume that the tools are completely useless.

Here’s the thing about this, from my experience, certain tools (the good ones that are not free) are actually pretty accurate. I can’t determine exactly how accurate they are, but I would certainly suggest that they are correct more often than they are wrong.

So if you were going to gamble, wouldn’t you rather play a game where the odds are in your favor?

What if the odds were in your favor 2 to 1?

You would definitely play that game…

Without any data tools, you’re completely blind, and I assure you that your competitors are not going to go about this blindfolded.

3,015 Myths About Keyword Research and Keyword Difficulty

Oh boy, I don’t even know where to get started with keyword difficulty myths. I’m just going to lump these all together and explain how not to fall into these traps.

My favorite is the classic; “I’m looking at the difficulty in AdWords.”

Yeah, that’s the competition in AdWords, not the organic results.

I haven’t seen this one too recently, but it used to be pretty common; people used to try to gauge the difficulty from the part of the Google result that says “About #” results.

That number seems to be entirely nonsensical and likely represents the approximate number of times each of the words appears on the internet. It has absolutely nothing to do with the competition level unless it returns a number like 10 or something.

What people are misunderstanding is how keyword difficulty actually works.

So you need to have good content on a decent site, but so do your competitors.

That content must be relevant to the search query, or it probably won’t rank.

For the most part, that just leaves the off-page factors, so you need to look at the pages that you are competing against and at the links (both internal and external) that are pointed to those pages.

Since the “power” of those links is the important part and Google provides zero information about that these days, you’re going to need a tool to estimate that.

You’re also going to need some experience.

If you’re a blogger and you’re looking at a list of competing pages, and they all have a page authority of 35+, that might seem easy, but for a blogger, you’re probably totally screwed unless your content goes viral.

“LSI Keywords”

If you believe in LSI keywords, you’ve probably been tricked into thinking that synonyms for keywords are “LSI keywords.”

I know there are these websites that supposedly tell you the LSI keywords.

Right? 🤔

Nope, those are just similar words or phrases that are variations of your target keyword.

Now I admit that’s very helpful for reducing the amount of word repetition, but that’s not the purpose of a latent semantic analysis at all.

Not even close.

The purpose is to analyze the words on the page to develop relationships between those words to gain further understanding of their meaning and more importantly the topic of the content.

LSA is actually how Google separates topics from keywords and why sometimes a page will rank without even using the target keyword.

So the real way to take advantage of LSI keywords would be to use similar vocabulary to pages that are already ranking for your target keyword in Google, not to use some tool that spews out variations of your target keyword.

This is also why it’s pretty difficult to rank a piece of content that is targeting the wrong keywords. So if your content is about the “history of bananas” it’s going to be pretty difficult to get that content to rank on the keyword “pilates vs yoga.” You can jam the keyword into that piece of content all you want; it won’t work…
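If you want to compare your vocabulary against the pages that already rank, a rough sketch in Python might look like this. To be clear, this is not latent semantic analysis, just a simple term comparison. The page texts, the tiny stopword list, and the function names are all assumptions I made up for the example.

```python
import re
from collections import Counter

# A deliberately tiny stopword list, just for this example.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "are",
             "for", "on", "with", "it", "that"}

def terms(text: str) -> list[str]:
    """Lowercased words from `text` with the stopwords removed."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS]

def missing_vocabulary(draft: str, ranking_pages: list[str], min_pages: int = 2) -> list[str]:
    """Terms used by at least `min_pages` ranking pages but absent from the draft."""
    page_counts = Counter()
    for page in ranking_pages:
        page_counts.update(set(terms(page)))  # count each term once per page
    draft_terms = set(terms(draft))
    return sorted(t for t, n in page_counts.items()
                  if n >= min_pages and t not in draft_terms)

# Hypothetical text from pages already ranking for "pilates vs yoga".
ranking_pages = [
    "Pilates builds core strength while yoga improves flexibility and breathing.",
    "Yoga focuses on flexibility and breathing; pilates targets core strength.",
    "Compare pilates and yoga for core strength, posture, and flexibility.",
]
draft = "Pilates vs yoga: which is better for posture?"
print(missing_vocabulary(draft, ranking_pages))
```

The words it returns are topic vocabulary that the ranking pages share and the draft lacks, which is a very different list from the keyword variations those “LSI” sites hand you.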

Sorry, I know many people have gotten tricked by this myth.

It is what it is.

🙂

Going down the rabbit hole.

Shortly after publishing this article, I decided to take a look into what those tools “do” and I got scared pretty quick. There’s “SEO gurus,” hard to believe claims, talk about instant rankings, and this thing wants my credit card…

Since I know what LSA is, I made an attempt to reverse engineer those tools, which failed horribly as they don’t appear to be using search results for their data. I have absolutely no clue what those tools do but I would describe the results as either “gibberish” or what appears to be a list of phrase match keywords.

The one tool does appear to be doing some kind of semantic analysis, but it appears to be comparing the semantics of the words in the keyword. So you end up with a list of keywords that are semantically related, but the important part is that the topics are not necessarily related… That is not the goal of LSA, which is to identify semantic relationships between content by comparing it to other content, not to analyze the semantic relationships between keywords.

I can’t say that it’s a scam as it does seem to do exactly what it sounds like it does, but this is due to an inadequacy of the English language. Most people likely believe that it does an analysis to help them with their on-page SEO by identifying important words to include in their content to help Google understand its semantic relationships to keywords.

I’m not suggesting those tools are useless, but I don’t have or know of a use for them. ¯_(ツ)_/¯

All of the keywords that it produced, that I feel made sense to include in my content, were already in it. I’m definitely not going to include the irrelevant and spam keywords it suggested, so that was a big waste of my time.

Bounce Rate and Dwell Time

This one isn’t that bad of a myth, since optimizing these factors does make sense. It just doesn’t make any sense that Google would care about them, as it has absolutely no way to collect that data.

Tip: They don’t use your analytics data since you can manipulate it and not every site on the internet uses Google Analytics.

This one fails the “I know how technology works and have common sense” test.

So here’s the truth about this: does reducing your bounce rate and increasing your dwell time help? Well sure, if the users are spending more time on your site that likely means that your content is quality, so in theory, more people will link to it.

So should you do this?

Yes, throw a heat map on your site and start testing but understand at the end of the day what this is actually doing for you.

You Should Put Your Keywords on Your About Page

This one actually comes from a supposed “SEO Guru.”

The more you know, the less sense this makes.

It leads me to believe that the person that wrote that article is not the owner of the site, as I’m pretty sure the owner of the site knows that it doesn’t matter and it’s complete nonsense.

If you tried to do this on a large site, it’s going to end up looking extraordinarily spammy and would probably hurt more than it helps (it doesn’t.)

The about page should be about you, your brand, your company, whatever.

The entire purpose of that page is to try to gain trust with people who want to know a little bit more about you, not a place for you to try to be optimizing the rest of your site for Google.

I do admit, though, that since your about page is typically linked from every page of your site, it likely has a lot of authority. So if you wrote an excellent guide that stands out above the rest of your content, you could link to it from your about page.

It would also be a great “demonstration” of what you’re all about.

You Need To Bold The Keywords

So I was chatting with somebody on a forum who seemed to be somewhat knowledgeable, but I didn’t see any of their sites so I had no idea how skilled they were.

One day the person suggested that to improve your rankings, you need to be bolding your keywords…

Yeah uh, I kind of remember that from one of those tools I used back in 1998 suggesting that you should bold them.

Google doesn’t care about how you style your text, that would be a little bit too easy to manipulate.

Sorry, it’s just a silly theory.

All Automated Tools are Blackhat

This one bothers me; obviously, you can do whatever you want with the tools.

Some of the tools are known to have been abused by spammers, such as ScrapeBox, but that doesn’t mean there are not perfectly legitimate uses for it.

As an example, if I want a list of blogs to network with, I can pull out a list of referring domains from an SEO data tool, then use ScrapeBox to quickly pull their title tags and meta description so that I can evaluate the list faster and filter out foreign sites.

It’s all about how you use the tools.
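To show how mundane that kind of task is, here is a sketch of the title and meta description part using only Python’s standard library. This is not how ScrapeBox works internally. The class and function names are mine, and the page source is a made-up stand-in for a page you would fetch yourself.

```python
from html.parser import HTMLParser

class TitleMetaParser(HTMLParser):
    """Collects the <title> text and the meta description from one HTML document."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = (attrs.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data

def summarize(html: str) -> tuple[str, str]:
    """Return (title, meta description) for a page's HTML source."""
    parser = TitleMetaParser()
    parser.feed(html)
    return parser.title.strip(), parser.description

# Hypothetical page source; in practice you would fetch each referring domain's homepage.
page = """<html><head><title> Example Gardening Blog </title>
<meta name="description" content="Tips for growing vegetables at home."></head>
<body><p>Hello</p></body></html>"""
print(summarize(page))
```

Run that over a list of referring domains and you can skim a few hundred prospects in minutes. Nothing about it is blackhat.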

Google is AI

It’s not AI, and it’s not aliens either.

It is extremely complicated and is strewn across multiple data centers all over the planet, but it’s not artificial intelligence.

One could argue that one of its newer features, called RankBrain, could be considered AI.

Personally, I wouldn’t go that far, but it definitely does use machine learning.

In the sense that the enemy monsters in a video game have AI, okay, sure, maybe RankBrain is AI in that sense, but I assure you, it’s very limited compared to what you might be thinking.

It’s not going to transform into SkyNet and take over the internet, so stop freaking out okay?

Backlinks Don’t Do Anything

This is a little different from the myth that you don’t need links to rank on competitive queries. These people think that links do not affect their rankings at all.

I’ve had multiple people tell me over the years that I was totally insane, the SEO tools were all scams, and that links do absolutely nothing.

One person, in particular, had an ancient website that had an absolute ton of links pointed to it. I admit the number of links was impressive.

They said they had been building links for years and absolutely nothing they did worked.

The problem was that their site was garbage and was likely penalized and their links were pretty much all garbage as well.

Yeah, if your site is penalized, links don’t do anything. 🙂

WordPress Sucks for SEO

Actually yeah, straight out of the box it does kind of suck.

But, you can quickly set up a plugin (I recommend the All In One SEO Pack plugin, that’s actually what I use on this site) and for the most part, your site should be fine.

There are also some other things that I suggest that you do:

Pick one: Pages or Posts and use that exclusively (I use Pages.)

Don’t use archive pages; these are the pages that are automatically generated for the months, categories, tags, and authors. It’s perfectly fine to have pages (or posts) to represent those things, but you want unique content on those pages rather than the excerpts.

There’s a bunch of other similar myths, like “WordPress is slow.” Just install W3 Total Cache and don’t use super bloated plugins, and you’ll be fine if you have a decent web host.

I’m not going to get into all of these, but I’ve encountered the “WordPress haters” a bunch of times. One person even admitted to being a developer for different web applications and claimed to charge $100 an hour to do development work.

So obviously that person has a vested interest in “hating on WordPress” since not many people are going to be okay with paying somebody $100 an hour to do something that they can probably find on a blog somewhere.

It’s Perfectly Safe to do it Because Somebody Else is

For the most part, this is the logic of every blackhat SEO.

Since their friend on some forum isn’t getting penalized (or arrested in some cases), they won’t either.

Never mind the fact that this stuff is extremely sketchy and that the specific things that cause the animal algorithms to attack are probably going to come down to a handful of links or a handful of pages of content.

Now, this advice is particularly awful.

On a particular forum that I would never mention here, I once read advice that went something like this; “If they let their domain expire, they are giving up their content, and you can use it.” There were people responding with comments like “oh okay cool!”

Yeah, don’t bother asking a lawyer, just do what some random person on a forum said to do. Great plan… /sarcasm

Never mind that this is theft and the registrar has absolutely zero authority over the copyright and has no ability to legally transfer it to anyone.

So there’s a bunch of people right now, that have built up these private blog networks that consist of expired domains and content that they scraped out of archive.org.

From a legal perspective, this is hilariously stupid.

The same website that they used to copy the content out of, is now permanent evidence of them stealing that content and all of those sites are linking directly to their site, where they are trying to make money.

They can’t really hide what they’re doing here; there’s a direct paper trail leading straight to them.

It’s like a reverse distributed denial of service attack against themselves; they’re just asking for some clever lawyer to form a class action lawsuit against them.

If you ever come across somebody admitting to doing this and they want to teach you how to do it, I suggest you run and fast.

I’ll admit that it works and it will help your rankings (until Google catches you), but is it really worth it?

Content is King

Honestly, this one exists because of people factors and not because of how the algorithm works, but it’s true to a certain extent.

It’s simple, how is anybody going to convince a person to link to their content if it’s pure garbage? You really can’t.

There’s one thing here that most people don’t realize: Google will happily rank blank pages. Don’t believe me? Google: “Blank PDF”

Now, one time I tried to explain this to somebody and they said something like “well Google wants to serve the user’s search intent.”

Right, here is the problem with that: the blank PDF that is number one in Google also happens to have the most and best quality links pointed to it.

So much for content is king. 😠

It’s actually search intent and how “link worthy” the content is.

You Need to Index Your Website

There’s no point in indexing your website.

Google will find it when it follows a link to it and since the age of links is a factor, that I’ve observed to be pretty significant, ramming the site into Google’s index will not help.

Just build the site out as usual, and when it earns a quality link, Google will crawl it shortly afterward.

Note: For most of my sites, Google usually somehow finds them anyway. I don’t know if there’s a domain registrar that produces a list of domains registered on specific dates that Googlebot finds or what.

If you want to add your sitemap to Google Search Console, go right ahead, but it’s not going to help your rankings.

There is an Appropriate Amount of Exact Match, Naked URL, and Brand Anchor Texts

This myth usually comes from people in the blackhat SEO community who are using spam tools in a (sometimes pathetic) attempt to rank in Google.

The truth is that the anchor text mix is only one thing the Penguin algorithm is looking at.

So outside of that context of link spam, this is just a myth.

It’s much more about the quality of the links, which is determined by the quality of the content the link is in, and the quality of the links pointed to that page. There are other link factors as well, like the number of outbound links on the linking page and its position within the page.

If the links are good quality, the tolerances for strange patterns in anchor texts seem to go up.

As far as the spammers go, the spam links their tools create likely meet none of the criteria for a good link anyways. Yet they try to make the links “look natural” by adding in a ton of variation.

I doubt that consistently fools Google these days since the most significant pattern is that it’s a bunch of junk links.

Comments on Your Website Help Your Rankings

Wrong, comments on your website can actually hurt your rankings as they allow users to keep adding links to the page.

Now it’s true those links are nofollow, but your page still loses authority for each nofollow link, even though no authority flows over the link.

Advanced explanation: The reason this occurs is due to the aggregate effect of authority flowing around your site over internal links, and since there are more outbound links, you are losing authority. Google was smart and realized that people could do link sculpting using the nofollow attribute, so to prevent this, nofollow links still cause authority to “bleed away.”
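Here is a toy version of that model in Python, so you can see the arithmetic. It assumes a page splits its authority evenly across every outbound link and that the nofollow shares are simply thrown away. That is the behavior described above boiled down to one line of math, not Google’s actual formula, which is not public. The function name and the numbers are made up.

```python
def authority_per_followed_link(page_authority: float, followed: int, nofollow: int) -> float:
    """Authority passed over each followed link when every link,
    followed or not, takes an equal share (simplified assumption)."""
    total_links = followed + nofollow
    if followed == 0 or total_links == 0:
        return 0.0
    # The nofollow links still count in the denominator, but their share goes nowhere.
    return page_authority / total_links

# A page with 10 followed links, before and after 40 nofollow comment links show up.
before = authority_per_followed_link(1.0, followed=10, nofollow=0)
after = authority_per_followed_link(1.0, followed=10, nofollow=40)
print(f"{before:.3f} {after:.3f}")
```

Under that assumption, forty comment links cut what each of your real links passes along to a fifth of what it was.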

This may not be that big of a deal, as some people have also suggested that Google can figure out what sections of your website are comments and it ignores them.

I’ve seen evidence on both sides of that argument.

I’ve observed sites that were ranking (poorly) off followed links from comments. Note: the vast majority of comment sections on the internet are nofollow.

I also ran some tests where I tried to quote search text from the comment section of a website to see if it shows up in Google, and in most cases, it does not.

So it’s a lose-lose situation. The content in the comment section doesn’t usually show up in searches, but the links can bleed off your authority.

Not convinced? Many of the pages that I’ve personally seen that are ranking well on extremely competitive keywords have no comment section.

Try it, go search “cars” or “insurance,” I highly doubt any of the results in the top ten have comment sections (none did when I checked.)

Also, the comment section has to be maintained, or it can give the user the wrong impression about your site, especially if there are weird spam comments all over it.

Requiring a moderator to approve the comments first and then not approving any comments at all, is rude and can turn users off.

So this is a bunch of time being invested for no real benefit.

The last issue occurs if that page or site is extremely popular. There end up being so many comments that it feels like you are going to break your mouse’s scroll wheel trying to get to the bottom of the page. This is certainly not a good experience on mobile.

Now, most of these issues can be resolved by using a comment system such as Disqus.

I’ve considered having comments on this site using a comment system, but I’ve observed an enormous amount of “SEO quackery” going on in the comment sections of many sites, and I don’t want to upset people by deleting their comments. Especially on a post like this, I know there would be dozens of butt-hurt people saying things like “NO KEVIN, YOU’RE WRONG! NOFOLLOW LINKS TOTALLY RANK PAGES!”

Yeah, I’ll pass on that.

The Quality of a Link is Determined by the Domain Authority

No, it’s the page authority.

Often, sellers of garbage links will talk about the DA being super high or that some of the links are on EDU domains.

The reality is that those sellers are likely offering an automated service that produces a bunch of junk links on pages that are either orphans (which are pages with zero page authority because no external links are pointing to that page) or the page authority is so low that it’s probably worthless on competitive keywords.

Now it is true that when doing things like prospecting work for outreach, the Domain Authority/Domain Rating metrics are often used to filter out targets that are a waste of time.

DA is also useful for things like roughly estimating a domain’s worth, but that’s a very rough estimation.

You Can’t do Guest Posts

This myth comes from a few confusing comments that Matt Cutts made and “purists” that believe that you’re only allowed to get links to a site completely “naturally.”

Yes, you can do guest posts, but if Google does a manual review of your backlink profile, they are likely to consider your intent when doing these guest posts.

If your intent was to market yourself or your brand, I doubt they’re going to care unless you did this at a scale where it becomes apparent that you did not personally write all of that content.

If you did things like optimize the anchor text and are consistently linking to something that isn’t either the index of your site or an author bio, well that’s going to look a little fishy.

If you did one guest post and linked to a page of content that already has dozens of links, I doubt that’s a problem. Especially if you are talking about a topic and use your own content as a resource. It also helps if you are being fair about this and are linking to other resources.

Another thing to think about is why somebody would want to do a guest post if the purpose of this wasn’t for SEO.

So, pretend the link will be nofollow, and there’s no link equity being passed to your site.

What’s the point of a guest post if the link is nofollow?

This is simple: highly authoritative websites have a lot of traffic, and getting a post on that site can do wonders to improve your credibility, especially if your site is new.

So it’s perfectly valid to do guest posting for the purposes of getting traffic and developing your credibility, but if you do it, try to pretend that you are “SEO stupid.”

The anchor text should be your name, your brand, or a high click rate anchor text that invites the user to a page. Avoid using exact match anchor texts, where you use the keyword that you are targeting.

The biggest thing to consider here would be the number of guest posts you actually want to do and the quality of the sites you are getting them on.

It’s pretty difficult to get a guest post on a quality site, so if your goal is to exclusively do guest posts on high-quality sites, this practice really should be fine regarding the quantity.

Also at some point, it gets to be a really poor use of your time to keep having to write high-quality content for other people’s sites.

You can’t keep rewriting the same content over and over again as that would be a pretty clear indicator that your intent is SEO and it’s not likely to get approved on a high-quality site anyways.

If you’re still having trouble understanding what’s allowed, think about it this way. If you were allowed to write an article for a magazine and that magazine had an editorial team that would allow zero shenanigans, think about what the best approach for your article would be.

The Google Keyword Planner Data is Good for Keyword Research

Uh, no, it’s for Google Adwords users.

Just stop.

There are all kinds of things to consider when targeting a keyword.

The competition in Google Adwords should not be one of them (unless you plan to use Adwords, of course).

Also, the CPC metrics are not necessarily a good indicator of how much you can earn per click from that keyword, and it doesn’t represent the value of the organic traffic (I know I’m going to get crucified for this one if I don’t explain myself.)

Simply put: the pages that rank well in the organic rankings are not necessarily good at converting visitors into buyers, and the opposite is also true for landing pages used by Adwords marketers (as far as earning links.)

I recently observed a “professional SEO” suggesting that they are saving their clients money based upon the amount of money the traffic would likely cost in Adwords. Uh, that’s not how that works at all…

Organic traffic is traffic; it does not save “Adwords spend.”

Some would say that you can use the traffic metric in the keyword planner, and I guess you could, but quality tools provide all kinds of other information that should be considered as well.

These include click-stream data, information about the SERP features, and an estimation of how difficult it would actually be to rank on that keyword. That estimate isn’t a statistical analysis; the professional tools actually analyze the links pointed to those pages.

If you’re going to rely exclusively on the planner, I would suggest to you that your competition likely isn’t and this puts you at a competitive disadvantage.

White Hat SEO is Easy

It’s certainly easier than it used to be since there are a lot of quality tools available to help the process go more smoothly, but it’s not an easy process at all.

It’s actually a lot of work. Between crafting the perfect piece of content to attract links and endless hours of prospecting and outreach marketing, I would tend to think it’s actually pretty difficult.

The thing is, though, that since White Hats generally only use techniques that (theoretically) should be safe from Google penalties, the rankings and links can accumulate over time (usually years), leading to a large site that gets a ton of traffic.

Once a site reaches a certain size, it can almost grow itself just by posting quality content, due to the amount of traffic it receives. Tip: It really helps if that site has a large audience on social media and has built an email list.

You only Want Links from Sites that are Relevant

You want links from relevant content, not exclusively relevant sites.

It actually doesn’t matter if most of the content on the site is irrelevant, but it can look bizarre if the rest of the site is almost exclusively irrelevant. This might trigger an “unnatural links” manual action in certain situations.

I remember reading about a company that hired mommy bloggers to write articles about a topic that would be pretty strange for a new mother to write about on their blog.

I’m not saying what it was, but the topic was something like survival knives. Why on earth would a mommy blogger be reviewing survival knives?

A better question is, why would one hundred of them do that?

Obviously, this was some kind of link scheme and is definitely not allowed.

But, if you get a link from an authoritative site and there’s a good reason for it, it’s okay. This is especially true in the case of news media sites; obviously, the vast majority of the content on that site is not relevant to yours, but it’s a great link.

The Longer the Content is, the Better it Ranks.

Well, it’s true that a longer piece of content has a lot more perceived value and may attract more links, but I’ve seen no evidence that it will actually rank better on its target keyword.

Now it’s true that a longer piece of content may receive more traffic from Google (from related long tail keywords) as it has a lot more content on that page, which people might be searching for.

So the reason that people believe that longer content is better has more to do with user perception than actual ranking benefits on the target keyword.

Now you might be thinking “Sure thing Kevin the Wizard, nice 10,000-word piece of content dude…”

It’s just because I wanted all of this nonsense on one page…

Linking Out Hurts Your Rankings

Well, it can if you link out excessively, but generally speaking, there are enough internal links on most sites to mitigate most of the issue.

Also, since it appears that Google considers links to be content, linking out seems to actually help improve a page’s rankings in many cases.

The problem mainly arises for sites that are set up like a directory site where every single page on their site has dozens of outbound links.

If you are concerned about this, try to consolidate your outbound links onto fewer pages and avoid linking out on your index and category pages.

Really though, it’s more about the external links pointed to your site, not the links on your site that link out.

As I already explained, comments can be bad in the sense that each comment could result in an outbound link.

Tip: Please don’t link to Wikipedia, unless your site is educational or encyclopedic in nature, or the link is a citation. I’m pretty sure that everybody knows about Wikipedia, so those links are not very helpful to your users. It’s like when a person asks a question and somebody responds with “you can Google that.” Yeah, no kidding. Most people were probably looking for an insightful answer that draws upon personal experience, rather than hoping the result they get from Google is accurate. Typically when I link out to something, it’s because I personally use it, or recommend it, so my links are providing value to my readers. I don’t recommend that you go read an encyclopedia, it’s probably not the best use of your time.

You Need Perfect HTML

As a general rule of thumb, if Chrome will render the page correctly, I’m sure it’s okay.

Since HTML standards tend to change over time, it doesn’t make sense for Google to penalize sites because their code isn’t perfect.

The main thing to be worried about would be whether the page renders correctly.

You Should use an Indexer on your Backlinks

This is yet another myth from the blackhats.

If the links are junk, it’s probably better if Google doesn’t find them.

If they are quality, Google will find them and factor them in.

The schedule for Googlebot to recrawl the content is determined by the crawl budget and the settings in the sitemap. So Google should find them; have some patience, okay?

You’re better off doing outreach or promoting your blog post on social media.

You Must Have SSL

I honestly think that for all new sites, you should get an SSL certificate from Let’s Encrypt or use the certificate that is provided by cPanel.

But, I’ve seen many cases of sites migrating from HTTP to HTTPS that actually get hit with a temporary dip in their organic traffic.

Google does seem to handle this relatively well, but it can take a little while for things to even out.

After migration to HTTPS, your site should 301 redirect all nonsecure URLs to their secure versions.

There’s a potential that this could get screwed up by whoever is editing the .htaccess file and that’s not good at all. So make sure that whoever is doing your migration is knowledgeable.
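If you want a quick sanity check after the migration, here is a minimal sketch of what “correct” looks like for a single URL. It assumes you already have the status code and the `Location` header from whatever HTTP client you use (with automatic redirect following turned off); the function names `https_version` and `redirect_is_correct` are made up for this illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def https_version(url: str) -> str:
    """Return the same URL with only the scheme switched to https."""
    parts = urlsplit(url)
    return urlunsplit(("https",) + tuple(parts[1:]))


def redirect_is_correct(original_url: str, status: int, location: str) -> bool:
    """True if the response is a 301 that points at the https twin of the URL.

    A 302, or a redirect that dumps every page on the homepage, fails the check.
    """
    return status == 301 and location == https_version(original_url)
```

Run it against a sample of your old nonsecure URLs, including deep pages and not just the index. A redirect that sends everything to the homepage will fail the check.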

If the site is purely a blog and doesn’t collect user data in any way (even analytics), then it’s not necessary, and the migration will probably hurt more than it helps until Google settles down, which usually takes a few weeks if this was done correctly.

Emoji Links in Comments are a Rank Hack

This one comes from a particularly “special person.”

I’m sure they thought that they were an absolute rocket scientist when they figured this “rank hack” out.

Okay so here’s how this “works.” /eyeroll 🙄

If you use an emoji as the anchor text of a link, the little line under the link doesn’t appear.

This makes it very difficult for a moderator to catch the fact that there’s a spam link in the comment.

So this person was spamming comments all over the internet and ramming a link back to their site in the comment with an emoji as the anchor text.

Cute trick!

Too bad the links are nofollow, and if there was an award for “world’s most unnatural backlink profile,” that one would definitely win. It ranks just above using a period as the anchor text, which people used to do all the time around the year 2000, and that didn’t work so well back then either.

At least, back then the period trick kind of worked and the emoji technique does not.

A Site With More Pages is Better Than a Site with Fewer Pages

This really depends more so on the ratio of external links (if they are quality) to the number of pages.

For most people, a smaller site is generally better as it allows them to focus on creating quality content rather than quantity of content.

One thing I think people also forget is that content needs to be maintained. Just changing the publish date on it is not “maintaining it.”

The structure of the internal links is also pretty critical here, but if you use the “silo structure” and you flood your site out with pages, then only the pages that have external links pointing to them will rank well on competitive keywords.

I’ve seen some sites that are completely flooded out with junk content and really need a “content haircut.” Simply put: if there’s no benefit to having the piece of content on the site, then just delete it.

It’s Okay if Your Site is Slow

Uh, definitely not. Google seems to prefer sites that load quickly.

Now you don’t have to be neurotic about this, but site speed is certainly a real factor.

It’s also terrible for the user experience, and most people have a massively bad case of “internet induced ADHD” these days. So they’re not going to stick around waiting for your website to load.

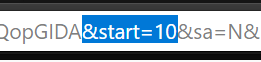

Duplicate Content Penalty

There is no duplicate content penalty. If it’s a duplicate piece of content on your site, it will show up in the supplemental index of Google and nowhere else.

How to find the supplemental index:

Note: This will not work if your site has more than about 550 pages of content. In that case, you will have to add a modifier to the search query (a keyword) and use a spreadsheet to collect all of the data together.

I’m getting one of those nervous tics just thinking about this. Have fun if your site has like 10,000 pages of content.

You need to go to the very last page of Google’s index for your site.

How to navigate to the last page of Google’s visible index:

Search “site:yoursite.extension”

Immediately click to the next page of results in Google.

Now you need to edit the Google URL.

In the URL bar at the top of your browser, there is a part that will say “start=10” or “start=100”, change the number to 550, so “start=550” then press enter.

That should take you to the last page, but you may need to click forward a few more pages.

Once you’re on the last page, at the very bottom it will say:

“In order to show you the most relevant results, we have omitted some entries very similar to the ### already displayed. If you like, you can repeat the search with the omitted results included.”

Click that link and compare the results, you will likely need a spreadsheet for this.
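If editing the URL by hand gets tedious, the same change can be scripted. This is just a sketch of the URL edit described above using Python’s standard library; the function name `jump_to_result` is hypothetical, and it only rewrites the address, it does not fetch anything from Google.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def jump_to_result(url: str, start: int = 550) -> str:
    """Return the search URL with its "start" parameter set to the given offset."""
    parts = urlsplit(url)
    # Keep every existing parameter except any old "start" value.
    params = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key != "start"
    ]
    params.append(("start", str(start)))
    return urlunsplit(
        (parts.scheme, parts.netloc, parts.path, urlencode(params), parts.fragment)
    )
```

Paste the result into your browser’s URL bar the same way you would the hand-edited version.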

There are other ways to do this that are faster, but I’m not going to get into that now.

SEO Traffic is Free

Now, it’s true that organic traffic is free in the sense that you don’t have to pay per click for it.

But, it certainly takes time to get rankings, and it takes even more time to maintain those rankings.

Not many people that I talk to think that their time is free unless it’s the time they spend with their family.

Exact Match Domains are Better

Google doesn’t seem to care too much about exact match domains these days, and it’s very easy to have issues with the anchor texts from quality links getting accidentally over optimized.

Honestly, a brandable name is a better choice for a domain.

All Comment Links are Bad

That’s definitely not true. The nofollow link attribute was created to fight spam; this does not mean that all comment links are automatically spam.

If you leave an intelligent comment on a site, you can certainly leave a link back to your site.

If the moderator of the site has a problem with that, well then your comment won’t get approved. Oh well.

Now, that link isn’t going to provide any benefit to your site’s rankings, but it could send some traffic.

Blogging Helps your Rankings

That really depends on what you do with your blog.

If you’re posting a biography of your life and you’re not a noteworthy person, it’s probably not going to work.

If your blog has quality content that attracts links, then it could, but many people are stuck in the mindset that all they need to do is post to their blog to rank.

That’s just not true.

It’s All about the Long Tail

There are plenty of easy rankings in the long tail, but you shouldn’t focus exclusively on long tail keywords.

At some point, your site is going to be flooded with content, and that’s not good either unless that content is benefiting the site in some way.

Now I’m not suggesting that you should target suicidally difficult keywords, but since Google understands topics, you should be targeting the right keywords with your content.

If you find lower competition topics, that’s great, but targeting a keyword with a lower competition level because it’s “a longtail” typically doesn’t pan out that well these days.

Guaranteed Rankings

If a person is claiming that they can guarantee rankings, run.

What’s probably going on is they’re going to do some “work” and then point out that they ranked your site on a bunch of keywords that get little to no traffic.

This isn’t difficult to do.

Generally, all pages will rank on page one for some kind of keyword variation, especially if the name of the topic has a lot of words in it, or contains a strange combination of words.

I could easily take any page on the internet (that isn’t penalized) and produce a giant list of things to type into Google to find it.

It’s the Domain Age

This one just won’t die. It’s more about the age of the links pointed to the content.

It makes absolutely no sense whatsoever that the age of a domain would matter.

If that was true, you would find ancient sites dominating the SERPs, and you’re probably not going to find any that are not well maintained.

Your Site’s IP Address Doesn’t Matter

It definitely does. I always prefer to have my sites on a static IP address with no other domains on them.

If there’s a bunch of garbage spam sites on the same IP address, this can hurt your rankings.

If you are on one of those cheap web hosts, you may want to consider performing a reverse IP lookup on your site’s IP address to make sure there’s not a bunch of weird spam sites on the same IP.

I’ve also heard of Google penalizing IP addresses because there were too many spam sites on that IP. This is known as a bad neighborhood, and I’ve heard that some IPs are blacklisted because of spammers. Maybe that penalty goes away at some point; I have no idea. I would just avoid it.

There’s Some Magic Ratio Between Nofollow and DoFollow

The nofollow links don’t count, so no.

Under normal circumstances, there is no benefit and they don’t hurt.

This is another one of these “we did a correlation test and found something” type of things.

You certainly do not need to be building nofollow links to “fix the ratio.”

You Should have a Finished Website Before trying to Market it

There’s no point in waiting. Once you have your first piece of good quality content, start marketing it.

By waiting, you’re actually slowing down the process of earning rankings.

Just make sure the content that you have on your site is quality.

You may need to spend some time thinking about how you are going to structure your site beforehand, to account for growth later.

This is because you want to avoid moving content from one URL to another.

It’s true that you can do a 301 redirect, but ideally, you should just avoid that.

So some people want to start an e-commerce site, and they think it’s a good idea to start the blog and get some links to the blog content before opening the store. I agree; I think that is a great plan.

So you will have to consider what URLs the blog content will be on when you migrate to that e-commerce host.

If you’re not sure, put the blog on the “blog” subdomain or talk to the representatives at the e-commerce host to figure out where the blog content will eventually end up.

You need to Improve your Alexa Rankings

Who cares about your Alexa rankings?

I’ve seen this one a lot; I think there’s a job employment site that asks a question about this or something because there’s just way too many people who want to know how to improve their Alexa rankings…

Alexa has absolutely nothing to do with Google.

Also, I’ve observed many sites that, when they get into the top one million of Alexa, start getting hit with a massive amount of weird links from strange scraper sites.

I doubt that helps much and if your site has very few quality links, it might actually trigger a Penguin penalty, but I kind of doubt that.

Posting Regularity Improves your Rankings

Google doesn’t actually care about how regularly you post new content to your site.

Now, your readers might, and regular posting might help get more traffic to your site, which might, in turn, earn more links.

So having a regular posting schedule is not a bad thing, but Google doesn’t care either way.

You should Disavow your NoFollow Links

LMAO… 🤣

Why? What for?

Do you just want to make sure Google doesn’t care about them or something?

I’m not sure if this person was serious, hopefully not.

Your Website Should only Earn Links Slowly.

I’ve seen plenty of corporate level sites earn links quite quickly and it did not seem to matter.

Now, those were quality sites, and they were earning quality links.

So if you are an individual blogger and you are just getting started, it might be really odd for your site to suddenly get an influx of links.

That might trigger a manual review and if the site is junk, or the links are garbage, you might get a manual action. Pretty unlikely though if the links are quality.

The reason they might review your site is that it would be very “unnatural” for that to happen, excluding going viral and that’s obviously a good thing.

As a rule of thumb, as an individual, it’s pretty difficult to earn links “too fast.” It just takes too long to produce great content and market it.

NoFollow Links are Ranking Sites!

Story Time: This one person was hysterical, (name withheld to protect the innocent.)

They were suggesting that the Google shortener links were actually “converting” nofollow links into followed links and they were “getting really good results.”

No, the links were clearly nofollow and they were probably observing improved rankings from their links aging and Google trusting their site more.

Google removed their link shortening service, so that part of this myth is dead, but I’ve seen some extremely respectable SEOs suggest that nofollow links can indeed influence a site’s rankings.

Every time this happens, I make a note of it, then check the site a few weeks later with tools. In almost every case, sure enough, there were followed links pointed to the site that would explain the rankings, which the tools had not found when this person made the claim.

Also, the Google Search Console does not always show you every link to your site, so you can’t go off that either in these situations where it appears a site is “ranking off nofollow links.”

The last case where this can happen is when a site is using a private blog network, and those sites are configured in such a way where they are basically hidden from the SEO tools. When this is occurring the site appears to have “ghost rankings,” it’s like it’s ranking off links that are not there. The reality is that they’re there, you just can’t see them. Tools like Moz and Majestic are starting to fill in these “data gaps.”

Directory Links are a Great Idea!

Not so much anymore, sorry. Many of these directories are filled with garbage and are poorly maintained.

There are some quality “niche directories” around that might be worth getting a link from, but because these sites have extremely high amounts of outbound links, I wouldn’t put too much effort into this.

Honestly, I recommend you skip directory links entirely unless it’s a website for a local business.

Black Hat SEO Doesn’t Work

It’s true that the tolerances for spam are much much lower than they used to be, but I can still find a few sites that are clearly ranked off spam.

So yes, it does still work (kind of.)

That doesn’t make it a good idea.

Negative SEO is Fake News

No, it’s definitely a real problem, but one that can easily be mitigated by carefully watching your site’s backlink profile and disavowing the spam links.

As a general rule of thumb, since it generally takes about a month for links to start to significantly help a site’s rankings, I would suggest checking your site’s backlink profile about twice a month.

Understand though, that there’s a difference between sites that automatically create links out to other sites and spam links.

The spam is the problem; the automatic links are likely okay as long as your site has quality links.

It’s all about ratios.

Anything To Do With Authors Or Authorship

It’s dead, move on. Authorship can easily be manipulated with ghostwriters, and I doubt it will ever be back.

Some people were suggesting that the recent E-A-T (Medic) update might somehow be related to authorship. I personally don’t think so; I think it has more to do with the fact that the sites that were hit the hardest had questionable content with claims that were hard to believe.

All Forms of Link Building are Blackhat, and you Must Get All of Your Links “Naturally.”

This is not true; Google doesn’t care if you market your website in an effort to earn links. They care about spam and clear attempts to manipulate the algorithm.

There’s a big difference between “earning links” and spam.

I call the people who believe this myth “purists” and they definitely need to rethink this.

I’m not suggesting that you must do email outreach marketing, but you need to do some kind of networking.

It’s not difficult to make a list of bloggers in your niche and try to network with them on social media; they probably have links to their social accounts right on their website…

Your Site Can’t Have any Broken Links

It really shouldn’t as broken links might be an indicator of low quality (it’s certainly a bad user experience), but a few broken links here and there are not likely to be a problem, so neurotically checking your site for broken links is probably not going to help.

It’s probably worth checking in a tool once or twice a month.
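If you would rather script that occasional check than use a tool, the idea fits in a few lines. This is only a rough sketch: `LinkCollector` and `find_broken_links` are names invented for this example, and `get_status` is a stand-in for whatever function you use to request a URL and return its HTTP status code.

```python
from html.parser import HTMLParser
from typing import Callable, List


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def find_broken_links(html: str, get_status: Callable[[str], int]) -> List[str]:
    """Return the links whose status code is 400 or higher (404, 410, 500...)."""
    collector = LinkCollector()
    collector.feed(html)
    return [url for url in collector.links if get_status(url) >= 400]
```

Passing the status lookup in as a function keeps the sketch independent of any particular HTTP library, and it also makes it easy to try out against a canned dictionary of URLs before pointing it at a live site.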

The Title Tag Should be :Insert Random Number: of Words in Length

The titles are actually based upon the number of pixels, not the number of words.

Google Hates SEOs

That really depends on what kind of SEO they are.

Google does hate spam and people who manipulate their search engine.

As long as you understand this, you should be fine:

Links are like votes and should be purely editorial in nature.

It should be up to the owner of the website (or author) to choose to link to your site.

If you’re buying links or somehow forcing people to create them, that’s not okay.

That also includes things like footer links in free WordPress themes and plugins, those links should be nofollow.

Google Uses Hashtags as a Ranking Factor

How would this even work?

Okay so let me get this straight, so Google gives Facebook and Twitter a call on the phone and says “Hey everyone what’s going on? We need all of your hashtags, send them over now.”

Considering that these companies are all competitors, this is utterly ridiculous.

Now, I’ve seen some sites use hashtags for category descriptions and Google honestly just seems to ignore the pound symbol.

If you use a hashtag as a search query, Google will usually serve a page back to you with a Twitter SERP feature.

Also, many people use hashtags in a way that is super spammy, so I have no idea why Google would care about that.

As far as using hashtags in content, I guess there’s only one way to find out right?

#SEOMyths #RankMyContent

Yeah, I’m doing it! Straight to number one baby! 😍

The Disavow Tool is Useless.

I once had a person tell me that the disavow tool is a “hamster wheel.”

No, since the Penguin 4 update, it’s pretty clear that it works.

I admit that before, it was likely more of a sign of “good faith” during manual reviews since Penguin used to update so infrequently.

You should include all spam links to your site in the disavow tool.
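The disavow tool takes a plain text file with one entry per line: a full URL, or `domain:` followed by a domain to cover every link from that site, with lines starting with `#` treated as comments. Here is a minimal sketch for building that file from a list you have already reviewed by hand. The function name and the example domains are hypothetical.

```python
def build_disavow_file(spam_domains, spam_urls=(), note=""):
    """Return the text of a disavow file for the given domains and URLs."""
    lines = []
    if note:
        lines.append(f"# {note}")
    # Whole domains first, de-duplicated and sorted so the file is easy to diff.
    lines.extend(f"domain:{domain}" for domain in sorted(set(spam_domains)))
    # Then any individual URLs you only want to disavow one at a time.
    lines.extend(sorted(set(spam_urls)))
    return "\n".join(lines) + "\n"


print(build_disavow_file(["spam-b.example", "spam-a.example"], note="reviewed by hand"))
```

Deciding which links are actually spam is still the hard part, and nothing here does that for you.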

You only Want one Link from Each Domain

This is a myth that comes from email outreach marketing.

The concept is simple: if a site linked to you, you don’t want to upset that site owner by contacting them “too much” and have them remove all of your links.

Outside of that context, it doesn’t matter. Each quality link will provide some value.

As far as outreach marketing, this is simple: don’t piss off your contacts. They’re people that you’re trying to build a relationship with.

If you got the link by sending them an email that was generated with some kind of automated tool, you’re probably not going to get on their good side by sending them more email templates, so you should probably take your link and run.

They’re probably going to figure out that it was some kind of automated email the second time they get it. Most people are probably going to feel kind of weird when they realize they linked to a website because a robot sent them an email.

You Need AdWords to Rank

Yes, Google is evil, if you don’t buy their ads, you won’t rank. /sarcasm

Never mind that there are at least a million examples of that not being true…

Everything Matt Cutts Said is True

You have to understand that it wasn’t Matt Cutts’ job to explain how to manipulate rankings or explain how the core algorithm works.

The information he gave was very informative, but I would take some of the things he said with a grain of salt.

Specifically, I remember him describing how the order of the links matters on a page. Now I do think that the order of the links does matter, but his explanation didn’t seem to be consistent with reality.

There was the whole guest posting fiasco as well.

XML Sitemaps Improve your Rankings

Only in the case that Google is having trouble indexing your content for one reason or another.

You don’t actually even need a sitemap, but I recommend it.

Not needing one is especially true if your site is small, since Google will probably be able to crawl your whole site pretty quickly.

It has to be a .com, or you Won’t Rank

No, Google will rank any of the domain extensions just the same.

Now there might be issues here with user perception, so a .com domain might have a slightly higher click rate in the search results.

That’s very possible.

Correlation Data is Meaningful with Small Data Sets

I don’t want to get into this myth too much, but there are a lot of people that seem to think that you can gain insight into what is currently working by analyzing a handful of search results.

It’s meaningless; you’re staring at patterns in chaos. Much of this stuff represents trends in content creation and has absolutely nothing to do with the algorithm.

There are also going to be patterns in different niches as people have a tendency to copycat each other.
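If you want to see the “patterns in chaos” problem for yourself, here is a rough simulation. It invents a pile of completely random “factors” that have nothing to do with rankings, then reports the strongest correlation any of them shows with ranking position. The names and the choice of 200 factors are made up for this sketch; nothing in it touches real search data.

```python
import random
from math import sqrt


def pearson(xs, ys):
    """Plain Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def strongest_fake_correlation(sample_size, factor_count=200, seed=0):
    """Largest |correlation| between ranking position and pure random noise."""
    rng = random.Random(seed)
    positions = list(range(1, sample_size + 1))
    best = 0.0
    for _ in range(factor_count):
        noise = [rng.random() for _ in positions]
        best = max(best, abs(pearson(noise, positions)))
    return best


print("10 results:  ", round(strongest_fake_correlation(10), 2))
print("1000 results:", round(strongest_fake_correlation(1000), 2))
```

With only ten results, at least one of the meaningless factors should look like a strong “ranking signal” purely by chance, and with a thousand results the same noise should shrink toward zero. That is the whole problem with drawing conclusions from a handful of SERPs.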

SEO is Dead

Only to the people that can’t do it.

Some things have changed, but as far as I can tell, the only thing that’s dead is spam and low-quality sites.

Debunking SEO Myths Is Not Scalable

Actually, this one might be right, since it came straight from John Mueller.

I think I got most of them. I already know there’s a few I skipped (things like meta tags), but that stuff is covered elsewhere.

And as long as there are “gurus” teaching complete nonsense, there will always be demand for somebody to debunk it.